Proxmox

Proxmox, specifically Proxmox Virtual Environment (PVE), is a type-1 (bare-metal) hypervisor built on Debian with a customized kernel based on Ubuntu's. Proxmox uses Kernel-based Virtual Machine (KVM) and LXC to provide virtualization and containerization. Proxmox is considered enterprise-grade software, and as such it has many features meant for large organizations, such as LDAP-based authentication, clustering, high availability, live migration, robust backup solutions, and many more. One of the best features of Proxmox is its licensing model: for typical uses such as testing, home labs, development, or even enterprise deployments, Proxmox is completely free to use. A paid subscription adds the kind of support most enterprises expect, along with access to the enterprise apt repository, which prioritizes security, stability, and compatibility over bleeding-edge features.

Even though it's free software, Proxmox has a thriving community and great support through the Proxmox Support Forum. This is the main form of support without a subscription, but thanks to Proxmox's popularity there's plenty of content about it across the internet. All of these factors make Proxmox a fantastic choice for the home lab. This guide provides just enough information to get started with Proxmox.

Prerequisites

- Reliable 2GB or larger USB drive

- Intel or AMD x86-64 CPU with hardware virtualization support (Intel VT-x or AMD-V)

- 4GB or more RAM: at least 2GB is required for Proxmox itself, and the rest is for the guest VMs

- 128GB or larger Solid State Drive

- Internet connection

- A local network with a firewall

- Another working computer with a modern web browser on the same network

Hardware for Proxmox

Proxmox has some specific hardware requirements, but it is possible to find hardware that lets you use Proxmox to its full potential while keeping costs relatively low. The most important consideration is hardware virtualization support, usually marketed as Intel VT-x or AMD-V. Hardware that supports these instructions can run virtual machines with additional features and better performance. Proxmox also only works with 64-bit processors, but that's standard on all modern Intel and AMD x86 desktop CPUs. Full support for CPU features often also requires a compatible motherboard, so checking the motherboard's manual is a good first step to verify feature support.
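On a machine that can already boot Linux (for example from a live USB), the CPU's advertised capabilities appear as flags in /proc/cpuinfo: lm for 64-bit support, vmx for Intel VT-x, and svm for AMD-V. The Python sketch below reads them. It assumes a Linux system. A flag being present means the CPU supports the feature, but it does not guarantee the BIOS has it enabled.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags listed on the first 'flags' line of /proc/cpuinfo."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def virtualization_support(flags: set[str]) -> dict[str, bool]:
    """Summarize the CPU flags that matter for running Proxmox."""
    return {
        "x86_64": "lm" in flags,       # "long mode": the CPU is 64-bit capable
        "intel_vt_x": "vmx" in flags,  # Intel VT-x
        "amd_v": "svm" in flags,       # AMD-V
    }


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only present on Linux
        print(virtualization_support(cpu_flags(cpuinfo.read_text())))
```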

Proxmox doesn't require, but does benefit from, enterprise, business, or workstation grade hardware. Examples include hardware RAID, ECC memory, battery backup, high-RPM enterprise or data center hard drives, server-grade CPUs, and similar features. At minimum, a battery backup is recommended to protect data integrity during power outages or fluctuations. Solid state drives can also provide large jumps in performance, but make sure you have proper backups or redundancy, because SSD failures are often sudden and, eventually, inevitable.

PCIe slot configuration can also vary greatly depending on the choice of motherboard and CPU. What is physically present can differ from what will actually work. For example, an Intel Core i3 on a mid-range motherboard might have two physical x16 slots and two M.2 slots, but its supported configurations might look something like this:

| Slot | Physical | Electrical |
|---|---|---|
| PCIe slot 1 | PCIe x16 | PCIe 4.0 x16 |
| PCIe slot 2 | PCIe x16 | PCIe 3.0 x8 |
| M.2 slot 1 | M.2 | PCIe 3.0 x4 |
| M.2 slot 2 | M.2 | Not enough PCIe lanes on the CPU |

or

| Slot | Physical | Electrical |
|---|---|---|
| PCIe slot 1 | PCIe x16 | PCIe 4.0 x16 |
| PCIe slot 2 | PCIe x16 | Not enough PCIe lanes on the CPU |
| M.2 slot 1 | M.2 | PCIe 4.0 x4 |
| M.2 slot 2 | M.2 | Not enough PCIe lanes on the CPU |

The same motherboard with an i7 might look like this:

| Slot | Physical | Electrical |
|---|---|---|
| PCIe slot 1 | PCIe x16 | PCIe 4.0 x16 |
| PCIe slot 2 | PCIe x16 | PCIe 3.0 x4 |
| M.2 slot 1 | M.2 | PCIe 4.0 x4 |
| M.2 slot 2 | M.2 | PCIe 3.0 x4 |

or

| Slot | Physical | Electrical |
|---|---|---|
| PCIe slot 1 | PCIe x16 | PCIe 4.0 x16 |
| PCIe slot 2 | PCIe x16 | Not enough PCIe lanes on the CPU |
| M.2 slot 1 | M.2 | PCIe 4.0 x4 |
| M.2 slot 2 | M.2 | PCIe 4.0 x4 |

These configurations are completely fictional, but they show a common real-world pattern. PCIe lanes are valuable for hypervisors, because network cards, storage controllers, and GPUs for passthrough all compete for them. Pay close attention to the hardware's supported PCIe configurations.
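The Python sketch below puts numbers on the first fictional i3 configuration by estimating one-direction bandwidth per slot from the PCIe generation and electrical lane count. It assumes the standard signaling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b encoding and ignores protocol overhead, so real throughput is somewhat lower. The slot names are illustrative.

```python
# Signaling rate per lane in GT/s; both generations use 128b/130b encoding.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0}


def lane_gbps(gen: str) -> float:
    """Approximate usable GB/s per lane, one direction, before protocol overhead."""
    return GT_PER_S[gen] * (128 / 130) / 8  # 8 bits per byte


def slot_bandwidth(gen: str, lanes: int) -> float:
    return lane_gbps(gen) * lanes


# The first fictional i3 configuration: (slot, generation, electrical lanes).
# A generation of None means the slot is disabled for lack of CPU lanes.
I3_CONFIG = [
    ("PCIe slot 1", "4.0", 16),
    ("PCIe slot 2", "3.0", 8),
    ("M.2 slot 1", "3.0", 4),
    ("M.2 slot 2", None, 0),
]

if __name__ == "__main__":
    for slot, gen, lanes in I3_CONFIG:
        if gen is None:
            print(f"{slot}: disabled")
        else:
            print(f"{slot}: PCIe {gen} x{lanes}, about {slot_bandwidth(gen, lanes):.1f} GB/s")
    print("lanes wired up:", sum(lanes for _, _, lanes in I3_CONFIG))
```

Comparing the printed figures for the i3 and i7 tables shows how the same physical slot can offer very different bandwidth depending on the CPU.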

Multiple Ethernet ports or network interface cards (NICs) can help with complex network setups, high bandwidth requirements, high availability, and improved security. Separating VM and Proxmox traffic across multiple ports ensures the guest VMs have more bandwidth when that is a priority. Multiple ports can also maintain uptime by providing alternate routes if one or more ports go down. If you're planning on running a router as a VM, having at least two additional network ports is a must!

Virtualization is essentially running many computers within a computer, so it is paramount that the underlying hardware is in good condition and stable. Signs of abuse include:

- a CPU or motherboard damaged by extreme overclocking
- RAM overclocked beyond its manufacturer's recommendations
- storage media with existing sector errors

These and similar problems can cause issues that grow increasingly complex as more VMs run at once. Used hardware is an inexpensive way to get started, but make sure it is stable and performs to its manufacturer's specifications.

BIOS configuration

Proxmox is installed directly on the hardware (bare metal), so it's important to check the BIOS settings and make sure everything is optimized for running a hypervisor. Intel and AMD have their own marketing terms for virtualization optimizations, and these terms can also differ by CPU architecture or motherboard manufacturer. The best approach is to search online for the exact CPU and motherboard model followed by "virtualization BIOS settings". In most cases the motherboard manual will have enough information and will also help with deciphering other BIOS settings. If the motherboard and CPU combination doesn't have any virtualization optimizations, it's possible to run Proxmox with some guest operating systems. However, many advanced features such as PCI passthrough will not work, and overall performance will be lower.
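One way to confirm a BIOS change took effect is to boot any Linux live USB and look for the /dev/kvm device node. The kernel's KVM modules normally create it only when hardware virtualization is both supported and enabled in firmware. The minimal Python sketch below assumes a Linux live environment. A missing node can also mean the KVM modules simply aren't loaded.

```python
import os


def kvm_available(dev: str = "/dev/kvm") -> bool:
    """True if the KVM device node exists on this system."""
    return os.path.exists(dev)


if __name__ == "__main__":
    if kvm_available():
        print("KVM is available")
    else:
        print("KVM is not available: check the BIOS virtualization settings")
```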

In addition to virtualization optimizations, consider changing the power-related options. Most BIOS have an option that decides what happens after a power loss:

- Always power on when power returns: usually the most useful option for a server.
- Always off: saves power and prevents continuous restarts.

Regardless, any server should be protected by a battery backup. To ensure maximum performance, look for power-saving settings in the BIOS and either disable them or choose the setting with the least power saving. On motherboards that provide overclocking settings, it's generally safe to enable factory overclocking features, especially ones supported by the CPU manufacturer. Custom overclocking beyond manufacturer recommendations is not recommended, as servers should prioritize stability over performance.

Motherboard-based RAID options can be useful, but on most consumer motherboards they are more trouble than they are worth. Some high-end or server-grade motherboards have advanced RAID capability using popular controllers such as Broadcom/LSI. In general, though, it's easier to find and use an inexpensive RAID controller PCIe expansion card. NVMe-based SSDs might require additional settings to be enabled on the motherboard; the manual will have more information. PCIe configuration settings in the BIOS can help ensure your PCIe expansion devices work as expected.

For additional security, motherboard-based password and encryption settings can protect the BIOS settings and provide an extra layer of security for the virtual machines running within Proxmox. Encrypting your root or main drive from the BIOS adds security. However, recovering from a motherboard failure will be impossible without a compatible replacement board and the encryption key.

Secure Boot

Unfortunately, Secure Boot on the host doesn't always work well with Proxmox, and in most cases it's recommended to disable it. It's possible to get Secure Boot working with Proxmox with some additional work. It's not officially supported, though, so finding guidance when something breaks is harder than usual. Secure Boot can still be used on the virtual machines running within Proxmox.

Create USB Installer

- Download the latest Proxmox VE ISO from: https://proxmox.com/en/downloads/category/iso-images-pve

- Download one of the popular bootable USB creation tools, such as balenaEtcher or Rufus

- In the tool, select the Proxmox ISO downloaded earlier

- Select the drive you want to write to; this will erase everything on the selected drive

- Start the writing process, grab some water while you wait
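Before writing the ISO, it's worth confirming the download isn't corrupted by comparing its SHA-256 hash with the checksum listed for the ISO on the Proxmox download page. The minimal Python sketch below does this. The file name in the usage comment is a placeholder, and the expected checksum has to be copied from the download page.

```python
import hashlib
import sys


def sha256_of(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash the file in 1 MiB chunks so a large ISO doesn't need to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: str, expected: str) -> bool:
    """Compare against the published checksum, ignoring case and whitespace."""
    return sha256_of(path) == expected.strip().lower()


if __name__ == "__main__" and len(sys.argv) == 3:
    # usage: python verify_iso.py proxmox-ve.iso <sha256-from-download-page>
    ok = verify(sys.argv[1], sys.argv[2])
    print("checksum OK" if ok else "checksum MISMATCH: download the ISO again")
```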

Install Proxmox

- Insert the USB into the computer that’s going to run Proxmox

- Enter the motherboard’s boot selection menu

- Select the install USB to boot from

- Select Install Proxmox VE

- A "No support for KVM virtualization detected" message means either the hardware doesn't support virtualization optimizations or the BIOS isn't configured properly.

- Select the disk to install to; use the whole disk if possible.

- Create a strong password, and use a valid email address if you want to receive system notifications.

- Make sure a valid network interface is selected, and provide details about the network Proxmox will connect to. Make sure the IP address is reserved on the router (or sits outside its DHCP range) so no other device is handed the same address. The host name must be a fully qualified domain name (FQDN), for example pve.home.lan. Any name in that form will work, even if the domain isn't publicly registered.

- Review the install settings, make sure the automatic reboot option is selected, then click the Install button.

- Use the progress bar to track the install.

- The system will automatically reboot, you might get a message about how to access the web interface before the reboot.

- Once Proxmox has fully started, the Proxmox command line interface should be waiting for a login and should show how to access the web interface.
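Mistakes in the network step can leave the web interface unreachable after the install, so it can help to sanity-check the values first. The Python sketch below uses the standard ipaddress module to check three things:

- the gateway is in the same subnet as the host address
- the host address isn't the network or broadcast address
- the host name is in FQDN form

The address, gateway, and host name in the example are hypothetical.

```python
import ipaddress
import re

# One DNS label: 1-63 letters, digits or hyphens, not starting or ending with "-".
_LABEL = re.compile(r"(?!-)[A-Za-z0-9-]{1,63}(?<!-)")


def is_fqdn(name: str) -> bool:
    """True if the name has at least two valid DNS labels, e.g. pve.home.lan."""
    labels = name.rstrip(".").split(".")
    return len(labels) >= 2 and all(_LABEL.fullmatch(label) for label in labels)


def check_install_network(cidr: str, gateway: str, hostname: str) -> list[str]:
    """Return a list of problems; an empty list means the settings look sane."""
    problems = []
    iface = ipaddress.ip_interface(cidr)  # host address with prefix, e.g. "10.10.3.11/24"
    gw = ipaddress.ip_address(gateway)
    if gw not in iface.network:
        problems.append(f"gateway {gw} is outside {iface.network}")
    if iface.ip == gw:
        problems.append("host IP and gateway are the same address")
    if iface.ip in (iface.network.network_address, iface.network.broadcast_address):
        problems.append(f"{iface.ip} is the network or broadcast address")
    if not is_fqdn(hostname):
        problems.append(f"{hostname!r} is not a fully qualified domain name")
    return problems


if __name__ == "__main__":
    print(check_install_network("10.10.3.11/24", "10.10.3.1", "pve.home.lan"))
```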

Access Proxmox

Even though Proxmox is built on top of Debian and has a CLI, the primary method for creating and managing virtual machines is the Proxmox Web UI. On a default install, the Web UI can be accessed using the IP address chosen during the install and the default port of 8006. For instance, a host configured with the IP address 10.10.3.11 would be accessed at https://10.10.3.11:8006
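If the page doesn't load, checking whether anything answers on port 8006 helps tell a network problem from a browser problem. The Python sketch below assumes the default port and the example address above. It only tests the TCP connection and does not log in. On a first visit, the browser will likely warn about Proxmox's self-signed certificate, which is separate from reachability.

```python
import socket

DEFAULT_PORT = 8006


def web_ui_url(host: str, port: int = DEFAULT_PORT) -> str:
    return f"https://{host}:{port}"


def port_open(host: str, port: int = DEFAULT_PORT, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    host = "10.10.3.11"  # example address; replace with your host's IP
    print(web_ui_url(host), "reachable" if port_open(host) else "not reachable")
```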

The default login user name is root, and the password is the one set during the install process. Proxmox supports creating users with various permissions, as well as other authentication methods that can improve security. For this exercise the default settings are good enough, but they should never be used in a production environment. Proxmox also supports multi-factor authentication, which should absolutely be used in production.

The free version of Proxmox shows a "No valid subscription" pop-up message. It can be safely ignored, since a license is not required to use Proxmox.

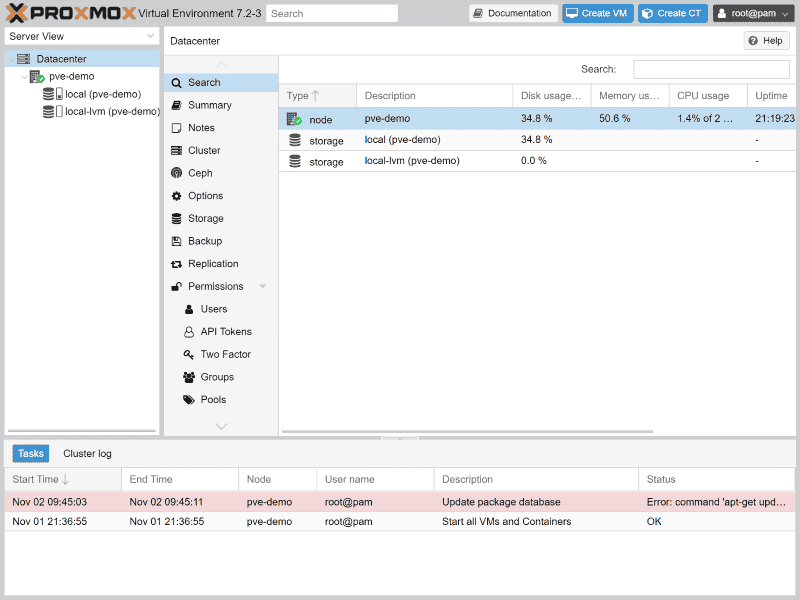

Proxmox Web UI

Proxmox's Web UI is meant to let administrators easily manage many Proxmox nodes and the virtual machines running on them. The Web UI is more than enough for common large-scale virtualization tasks, including:

- migrating virtual machines between physical nodes
- backing up virtual machines
- setting up storage clusters for virtual machines
- defining and managing virtual networks
- configuring high availability and disaster recovery
- managing authentication and permissions
- monitoring the data center

Few things require CLI access, and Proxmox keeps adding new features to the Web UI. As of November 2022, the two most common tasks I've had to do through the CLI are passing through individual storage drives from the host to a virtual machine and modifying kernel settings for IOMMU and GPU passthrough support.
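For the IOMMU side of that CLI work, there are two ways to confirm the kernel change took effect:

- check the kernel command line for a flag such as intel_iommu=on
- count the entries in /sys/kernel/iommu_groups, which the kernel populates only when an IOMMU is active

The Python sketch below does both and assumes a Linux host. On some hardware and kernel versions IOMMU is enabled without any flag, so the group count is the more direct signal.

```python
import os


def kernel_params(cmdline: str) -> dict[str, str]:
    """Parse /proc/cmdline text into {key: value}; bare flags map to ''."""
    params = {}
    for token in cmdline.split():
        key, _, value = token.partition("=")
        params[key] = value
    return params


def iommu_group_count(path: str = "/sys/kernel/iommu_groups") -> int:
    """Number of IOMMU groups; 0 usually means IOMMU is not active."""
    return len(os.listdir(path)) if os.path.isdir(path) else 0


if __name__ == "__main__" and os.path.exists("/proc/cmdline"):
    with open("/proc/cmdline") as f:
        params = kernel_params(f.read())
    print("intel_iommu =", params.get("intel_iommu", "<not set>"))
    print("iommu =", params.get("iommu", "<not set>"))
    print("IOMMU groups:", iommu_group_count())
```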

Create a Virtual Machine

A virtual machine is a complete software analog of a physical computer, so creating one in Proxmox is very similar to setting up a physical computer.

- Start by clicking the Create VM button at the top right of the Proxmox Web UI.

- This opens the Create: Virtual Machine dialog. Check the Advanced box at the bottom right to see the additional options.

- Node

- If you have multiple Proxmox nodes, this drop down will let you select which node the virtual machine will be created on.

- VM ID

- A numeric ID for the virtual machine; it must be unique across all nodes in a cluster.

- Name

- A recognizable alphanumeric name for the virtual machine.

- Resource Pool

- Adds the virtual machine to a Resource Pool. Pools are useful for controlling permissions and access to various elements within Proxmox.

- Start at boot

- Check this to have the virtual machine start when the host boots or reboots.

- Start/Shutdown order

- Controls the order in which virtual machines set to Start at boot are started when the host boots. Lower numbers start before higher numbers, and shutdown happens in reverse order.

- Startup delay

- The number of seconds to wait after this virtual machine starts before starting the next one in the boot order. Default is 0.

- Shutdown timeout

- The number of seconds to wait for the virtual machine to go offline after a shutdown command before forcibly stopping it. The default is 180 seconds (3 minutes).
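The three start-up settings work together, which is easier to see in a small model. The Python sketch below is a simplification, not Proxmox's actual scheduler. Virtual machines with an order start first, lowest number first. Those without one follow; here they are tie-broken by VM ID, which is an assumption. Shutdown runs in reverse. The startup_string() helper mirrors the order=N,up=S,down=S format of the startup option in Proxmox VM configs. The VM names and IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VM:
    vmid: int
    name: str
    order: Optional[int] = None  # Start/Shutdown order (None = not set)
    up: int = 0                  # Startup delay: seconds to wait before the next VM starts
    down: int = 180              # Shutdown timeout before a forced stop


def startup_string(vm: VM) -> str:
    """Render the settings in the order=N,up=S,down=S form."""
    parts = [] if vm.order is None else [f"order={vm.order}"]
    parts += [f"up={vm.up}", f"down={vm.down}"]
    return ",".join(parts)


def start_sequence(vms: list[VM]) -> list[str]:
    """VMs with an order first (lowest first), then the rest; ties broken by VM ID (assumed)."""
    ranked = sorted(vms, key=lambda v: (v.order is None, v.order or 0, v.vmid))
    return [v.name for v in ranked]


def shutdown_sequence(vms: list[VM]) -> list[str]:
    """Shutdown runs in the reverse of the start sequence."""
    return list(reversed(start_sequence(vms)))


if __name__ == "__main__":
    vms = [VM(101, "web", order=2), VM(100, "router", order=1, up=30), VM(102, "scratch")]
    print(start_sequence(vms))     # ['router', 'web', 'scratch']
    print(shutdown_sequence(vms))  # ['scratch', 'web', 'router']
    print(startup_string(vms[1]))  # order=1,up=30,down=180
```

Giving a router VM the lowest order and a startup delay, as in the example, lets it come up before the virtual machines that depend on its network.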

- The next screen (OS) sets up the type of operating system that will run on the virtual machine. If needed, it also sets up the virtual or physical disc drive required to install the operating system.

- Use CD/DVD disc image file (iso)

- Select an operating system installer ISO from local or remote storage.

- Use Physical CD/DVD Drive

- Pass through a physical disc drive from the host to the virtual machine, this will need to be setup after the virtual machine has been created.

- Do not use any media

- Skip disc drive setup entirely, for example when the virtual machine will boot from an existing virtual drive that already has an operating system.

- Guest OS

- Select the operating system type and version that’s closest to the operating system the virtual machine will run.

- The next screen (System) sets up the guest system settings.

- Graphic Card

- Select the type of virtual graphics card; leaving this as Default works in most cases.

- Machine

- The default option, i440fx, works for most scenarios, but changing to q35 is required for PCIe passthrough from the host to the virtual machine.

- BIOS

- The default, SeaBIOS, should work for most cases, but OVMF (UEFI) might be required for some modern operating systems.

- Add EFI Disk

- If OVMF is selected, a separate disk or volume is required to store the EFI data. Checking this option creates that disk during virtual machine setup.

- EFI Storage

- Selects the host storage volume the EFI disk will be created on.

- Pre-Enroll keys

- Enables Secure Boot and includes keys for some popular Linux distros and Microsoft Windows.

- SCSI Controller

- The SCSI controller provides most of the default virtual storage access on the virtual machine. VirtIO SCSI is the recommended controller type for high performance on modern operating systems.

- Qemu Agent

- Checking this tells the host that the virtual machine has, or will eventually have, the QEMU guest agent installed. The agent provides additional communication between host and guest, which improves features such as clean shutdowns and consistent snapshots.

- Add TPM

- Create a virtual TPM device for the virtual machine. Some modern operating systems, such as Windows 11, require this device.

- TPM Storage

- Selects the host storage volume the TPM data will be stored on.

- Version

- Select the TPM version to use. v2.0 is recommended in most cases; the older v1.2 is only for operating systems that specifically need it.
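Every choice on the System screen ends up as an option in the virtual machine's configuration. The Python sketch below maps the choices to option names as used by Proxmox's qm tool (machine, bios, efidisk0, scsihw, agent, tpmstate0). It builds, but does not run, a qm create command. The storage name local-lvm and VM ID 100 are placeholders, and only the options covered above are handled.

```python
from typing import Optional


def system_options(machine: str = "i440fx", bios: str = "seabios",
                   efi_storage: Optional[str] = None, pre_enroll_keys: bool = False,
                   scsihw: str = "virtio-scsi-pci", qemu_agent: bool = False,
                   tpm_storage: Optional[str] = None,
                   tpm_version: str = "v2.0") -> dict[str, str]:
    """Config options for the System screen (i440fx is the default, so it is omitted)."""
    if efi_storage and bios != "ovmf":
        raise ValueError("an EFI disk is only used with bios=ovmf")
    opts = {"bios": bios, "scsihw": scsihw}
    if machine == "q35":
        opts["machine"] = "q35"  # needed for PCIe passthrough
    if efi_storage:
        # "<storage>:1" asks Proxmox to allocate a new EFI vars volume on that storage.
        opts["efidisk0"] = f"{efi_storage}:1,efitype=4m,pre-enrolled-keys={int(pre_enroll_keys)}"
    if qemu_agent:
        opts["agent"] = "1"
    if tpm_storage:
        opts["tpmstate0"] = f"{tpm_storage}:1,version={tpm_version}"
    return opts


def qm_create_args(vmid: int, opts: dict[str, str]) -> list[str]:
    """Turn the options into a qm create argument list (built, not executed)."""
    args = ["qm", "create", str(vmid)]
    for key, value in opts.items():
        args += [f"--{key}", value]
    return args


if __name__ == "__main__":
    opts = system_options(machine="q35", bios="ovmf", efi_storage="local-lvm",
                          pre_enroll_keys=True, qemu_agent=True, tpm_storage="local-lvm")
    print(" ".join(qm_create_args(100, opts)))
```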

- The next screen (Disks) sets up the virtual disks for the virtual machine. The setup defaults to a single SCSI disk on scsi0, but additional drives can be added now if needed.

- Bus/Device

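- Select the type of disk controller (IDE, SATA, VirtIO Block, or SCSI) and the device number on that bus. Different types have different capabilities. IDE offers the broadest compatibility with older operating systems, while SCSI with the VirtIO SCSI controller generally offers the best performance and features on modern ones.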

- Storage

- Selects the host storage volume the virtual drive will reside on.

- Disk size (GiB)

- The size of the virtual disk drive in Gibibytes.

- Cache

- Select the cache mode for the virtual drive. No cache is fine for most cases, but other options are available.

- Discard

- Passes discard requests (commonly known as TRIM) from the guest operating system to the host storage so blocks of deleted data can be freed. This is most useful on SSDs and thin-provisioned storage. The guest operating system must support TRIM for discard to be effective.

- SSD emulation

- Presents the drive as a Solid State Drive to the guest operating system.

- IO thread

- Only applicable to VirtIO and SCSI drives; creates a dedicated I/O thread for each controller. This can increase performance when multiple drives are spread across multiple controllers.

- Read-only

- Makes the drive read-only, which will cause problems if an operating system needs to be installed on the drive.

- Backup

- Includes this virtual drive when the virtual machine is backed up.

- Skip replication

- Skips this drive for storage replication. Replication keeps a copy of the drive across nodes for the sake of data redundancy and reduced virtual machine migration times.

- Async IO

- Options for how QEMU handles asynchronous I/O on the host; io_uring is the newer option and is recommended.
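The Disks screen choices collapse into a single comma-separated option string for the disk. The Python sketch below builds that string. It assumes Proxmox's disk option names (cache, discard, ssd, iothread, ro, backup, replicate, aio) and the defaults of a recent Proxmox VE 7 install. local-lvm and the disk sizes are placeholders. Only options that differ from the defaults are emitted.

```python
CACHE_MODES = {"none", "writethrough", "writeback", "directsync", "unsafe"}
AIO_MODES = {"io_uring", "native", "threads"}


def disk_option(storage: str, size_gib: int, *, cache: str = "none",
                discard: bool = False, ssd: bool = False, iothread: bool = False,
                read_only: bool = False, backup: bool = True,
                replicate: bool = True, aio: str = "io_uring") -> str:
    """Return e.g. 'local-lvm:32,discard=on,ssd=1' for the Disks screen choices."""
    if cache not in CACHE_MODES:
        raise ValueError(f"unknown cache mode {cache!r}")
    if aio not in AIO_MODES:
        raise ValueError(f"unknown async IO mode {aio!r}")
    parts = [f"{storage}:{size_gib}"]  # "<storage>:<GiB>" allocates a new volume
    if cache != "none":
        parts.append(f"cache={cache}")
    if discard:
        parts.append("discard=on")
    if ssd:
        parts.append("ssd=1")
    if iothread:
        parts.append("iothread=1")
    if read_only:
        parts.append("ro=1")
    if not backup:
        parts.append("backup=0")
    if not replicate:
        parts.append("replicate=0")
    if aio != "io_uring":
        parts.append(f"aio={aio}")
    return ",".join(parts)


if __name__ == "__main__":
    print(disk_option("local-lvm", 32, discard=True, ssd=True, iothread=True))
```

The resulting string is what would follow --scsi0 on a qm create or qm set command line.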

- The next screen (CPU) sets up the CPU(s) for the virtual machine.

- Sockets

- Analogous to physical CPU sockets. Some software uses this for licensing.

- Cores

- Analogous to cores on physical CPUs. In terms of virtual machine performance there's no difference between sockets and cores on this configuration page. Four sockets with one core each, two sockets with two cores each, and one socket with four cores will all perform the same. The combined number of cores across all virtual machines can exceed the number of cores on the host, although a single virtual machine can't be given more vCPUs than the host has.

- Type

- Select a specific CPU generation or architecture to emulate. Newer generations and architectures generally provide more features, and selecting a type close to the host's CPU typically gives better performance and compatibility. Selecting host enables exactly the same features as the host's CPU. KVM64 is the default and provides the best compatibility, especially when physical Proxmox nodes have varying hardware and virtual machines need to migrate between them.

- VCPUs

- Controls how many of the CPUs or cores defined by Sockets and Cores above the virtual machine has access to. For instance, on a one-socket, four-core virtual machine, a VCPUs setting of three starts the virtual machine with three of the four cores enabled.

- CPU limit

- A floating point number that limits how much host CPU time the virtual machine can use, measured in host cores: a value of 1.0 equals 100% of one core's time. For comparison, a single process fully using one core shows as 100% CPU usage, whereas a process fully using four cores shows as 400%. This can limit how much of the overall CPU a virtual machine uses, independent of how many sockets and cores were set up earlier. A value of 0 means no limit.

- CPU units

- The relative weight of the virtual machine against other virtual machines on the host. The default is 1024 on hosts using cgroup v1 and 100 on hosts using cgroup v2 (the default since Proxmox VE 7). A larger value means the scheduler prioritizes the virtual machine, while a lower value lowers its priority on the host.

- Enable NUMA

- If the host hardware is in a NUMA configuration, enabling this setting lets Proxmox arrange resources so that the virtual machine uses cores and memory from the same physical socket.

- Extra CPU Flags

- Use this area to further customize CPU capabilities. In general these settings shouldn’t be changed.
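The CPU settings reduce to simple arithmetic, shown in the Python sketch below. It follows the descriptions above:

- sockets multiplied by cores gives the vCPU count
- cpulimit is measured in host cores
- CPU units are relative weights that only matter when virtual machines compete for CPU time

The VM names are hypothetical, and this is not how Proxmox's scheduler is implemented.

```python
from typing import Optional


def total_vcpus(sockets: int, cores: int) -> int:
    """Sockets x cores; 4x1, 2x2 and 1x4 all give the guest four vCPUs."""
    return sockets * cores


def active_vcpus(sockets: int, cores: int, vcpus: Optional[int] = None) -> int:
    """The VCPUs field starts the VM with only this many of the defined vCPUs."""
    total = total_vcpus(sockets, cores)
    if vcpus is None:
        return total
    if not 1 <= vcpus <= total:
        raise ValueError("VCPUs must be between 1 and sockets x cores")
    return vcpus


def cpulimit_percent(cpulimit: float) -> float:
    """cpulimit is measured in host cores: 1.0 -> 100%, 2.5 -> 250%; 0 = no limit."""
    return cpulimit * 100


def cpu_shares(units: dict[str, int]) -> dict[str, float]:
    """Fraction of contended CPU time each VM would get, proportional to CPU units."""
    total = sum(units.values())
    return {name: weight / total for name, weight in units.items()}


if __name__ == "__main__":
    print(active_vcpus(1, 4, vcpus=3))          # 3
    print(cpulimit_percent(1.5))                # 150.0
    print(cpu_shares({"db": 200, "web": 100}))  # db gets about 2/3, web about 1/3 under contention
```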

- The next screen (Memory) sets up the memory (RAM) for the virtual machine. As with the CPU section, the combined values across virtual machines don't have to be less than what's available on the host. However, once the host runs out of physical memory it has to fall back to swap, which is very costly. It's therefore always better for the host to have more physical memory than the total assigned to virtual machines. Remember also that Proxmox itself needs at least 2GB of RAM.

- Memory (MiB)

- The total memory (RAM) the virtual machine gets from the host, in mebibytes (MiB).

- Minimum memory (MiB)

- The lowest amount of RAM the virtual machine will use on the host. This is related to the

Ballooning Device

setting below. - Shares

- Similar to the CPU Units setting, this defines the virtual machine's share of memory relative to other virtual machines that use ballooning on the host. A larger number gives the virtual machine a larger share of the host's free RAM.

- Ballooning Device

- Dynamically allocates RAM from the host based on how much RAM the virtual machine is using. The Minimum memory setting above defines the amount of RAM that is always guaranteed to the virtual machine. Leave this setting on even if Minimum memory is set to the same value as Memory, because it enables additional features for monitoring virtual machine memory usage.
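A quick budget makes the advice about giving the host enough physical memory concrete. The Python sketch below assumes values in MiB, as on the Memory screen, and reserves 2048 MiB for Proxmox itself. The host and VM sizes are made-up examples.

```python
def gib_to_mib(gib: float) -> int:
    """The Memory screen uses MiB: 1 GiB = 1024 MiB."""
    return int(gib * 1024)


def memory_headroom(host_mib: int, vm_mib: list[int], host_reserve_mib: int = 2048) -> int:
    """Spare MiB after the host reserve and all guests' Memory values.

    A negative result means the guests could push the host into swap.
    """
    return host_mib - host_reserve_mib - sum(vm_mib)


if __name__ == "__main__":
    host = gib_to_mib(16)                   # 16384 MiB
    guests = [4096, 4096, gib_to_mib(2)]    # two 4 GiB VMs and one 2 GiB VM
    print(memory_headroom(host, guests))    # 4096
```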

- The next screen (Network) sets up the network device for the virtual machine, if desired.

- No network device

- Not all virtual machines need access to the network, checking this box will skip adding a network device.

- Bridge

- Select the network bridge from the host this virtual machine will use.

- VLAN Tag

- If using VLANs on the network and the host is VLAN aware, define the VLAN the virtual machine’s network will use.

- Firewall

- Check this to use the Proxmox Firewall for this virtual machine.

- Model

- Select from a few different network interface card models; VirtIO should be selected for maximum performance on a modern operating system.

- MAC address

- Automatically generates a random MAC address. An address can also be entered manually in the format aa:bb:cc:dd:ee:ff.

- Disconnect

- Creates the network device, but doesn’t connect it to the network. Similar to unplugging the ethernet cable on a physical computer.

- Rate limit

- Sets the maximum data rate of the network connection, in MB/s.

- Multiqueue

- When using the VirtIO driver, a virtual machine with multiple cores can use the additional cores to process network packets faster. Inside a Linux guest, the number of multi-purpose channels on each virtual network card must also be set to the number of vCPUs with ethtool: `ethtool -L ens1 combined X`. Here `ens1` is the virtual network card and `X` is the number of vCPUs on the virtual machine.
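Two network details lend themselves to a short script: matching the multiqueue channels to the vCPU count inside a Linux guest, and producing or checking a manual MAC address. The Python sketch below assumes the guest interface is named ens1 as in the text above (yours may differ), that ethtool is installed, and that the command is run as root. The MAC helper generates a random locally administered unicast address. It does not reproduce the address prefix Proxmox itself uses.

```python
import os
import random
import re
from typing import Optional

MAC_RE = re.compile(r"([0-9a-f]{2}:){5}[0-9a-f]{2}", re.IGNORECASE)


def multiqueue_command(iface: str, vcpus: int) -> list[str]:
    """ethtool command that sets the NIC's combined channels to the vCPU count."""
    return ["ethtool", "-L", iface, "combined", str(vcpus)]


def random_mac(rng: Optional[random.Random] = None) -> str:
    """Random unicast MAC with the locally administered bit set."""
    rng = rng or random.Random()
    octets = [rng.randrange(256) for _ in range(6)]
    octets[0] = (octets[0] | 0x02) & 0xFE  # set locally administered bit, clear multicast bit
    return ":".join(f"{o:02x}" for o in octets)


def is_valid_mac(mac: str) -> bool:
    """Check the aa:bb:cc:dd:ee:ff format described above."""
    return MAC_RE.fullmatch(mac) is not None


if __name__ == "__main__":
    # Inside the guest: print the command to review, then run it as root,
    # e.g. with subprocess.run(cmd, check=True).
    cmd = multiqueue_command("ens1", os.cpu_count() or 1)
    print(" ".join(cmd))
    print(random_mac())
```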

- The final screen (Confirm) shows a summary of the options selected earlier. At the bottom there's an option to start the virtual machine as soon as it's created.

Start And Stop

After initial setup, the virtual machine will appear in the tree under Server View. A lock appears over the virtual machine's icon while it is being set up. Once the lock goes away and a gray monitor icon is left, the virtual machine is ready to be started. If the option to start the virtual machine after creation was selected on the final screen, the icon will turn green, indicating the virtual machine is running.

The virtual machine state controls are on the top right of the screen, below the button used to create the virtual machine. Click the Start button to start the virtual machine. This button is disabled if the virtual machine is already running or if something is preventing it from starting.

After a virtual machine has started, the Shutdown button and dropdown are enabled. Shutdown attempts to cleanly shut down the virtual machine, as if a shutdown command were run inside the guest. Reboot, Pause, and Hibernate are roughly analogous to reboot, sleep, and hibernate on most modern operating systems. Pause freezes the virtual machine in memory, while Hibernate saves its state to disk and stops it. Stop and Reset are like pressing the hardware power and reset buttons. These commands stop the virtual machine immediately, without giving the guest a chance to shut down cleanly.
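Each of these buttons has a command-line equivalent in Proxmox's qm tool, run as root on the node that hosts the virtual machine. The Python sketch below only builds the commands; VM ID 100 is a placeholder.

```python
# Web UI button -> qm subcommand plus any extra arguments.
QM_ACTIONS = {
    "Start": ["start"],
    "Shutdown": ["shutdown"],                   # clean shutdown request to the guest
    "Reboot": ["reboot"],
    "Pause": ["suspend"],                       # freezes the VM in memory; undo with "resume"
    "Resume": ["resume"],
    "Hibernate": ["suspend", "--todisk", "1"],  # saves the VM state to disk, then stops it
    "Stop": ["stop"],                           # like cutting power
    "Reset": ["reset"],                         # like pressing the reset button
}


def qm_command(button: str, vmid: int) -> list[str]:
    """Build (but do not run) the qm command for a Web UI power button."""
    subcommand, *extra = QM_ACTIONS[button]
    return ["qm", subcommand, str(vmid), *extra]


if __name__ == "__main__":
    for button in QM_ACTIONS:
        print(f"{button:>9}: {' '.join(qm_command(button, 100))}")
```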

Console

With the default setup, and everything working as expected, Proxmox provides a view of the virtual machine's video output. The Console button opens a new window showing that output.

Conclusion

Those are the basic steps to get started with Proxmox, but one virtual machine isn't enough. The true power of Proxmox becomes apparent when multiple virtual machines are used, and even more so when multiple nodes are used. There are plenty more advanced capabilities that will be covered in later posts.